By analyzing neural signals, a brain-computer interface (BCI) can now almost instantaneously synthesize the speech of a man who lost use of his voice due to a neurodegenerative disease, a new study finds.

The researchers caution it will still be a long time before such a device, which could restore speech to paralyzed patients, will find use in everyday communication. Still, the hope is this work “will lead to a pathway for improving these systems further—for example, through technology transfer to industry,” says Maitreyee Wairagkar, a project scientist at the University of California Davis’s Neuroprosthetics Lab.

A major potential application for brain-computer interfaces is restoring the ability to communicate to people who can no longer speak due to disease or injury. For instance, scientists have developed a number of BCIs that can help translate neural signals into text.

However, text alone fails to capture many key aspects of human speech, such as intonation, that help to convey meaning. In addition, text-based communication is slow, Wairagkar says.

Now, researchers have developed what they call a brain-to-voice neuroprosthesis that can decode neural activity into sounds in real time. They detailed their findings 11 June in the journal Nature.

“Losing the ability to speak due to neurological disease is devastating,” Wairagkar says. “Developing a technology that can bypass the damaged pathways of the nervous system to restore speech can have a big impact on the lives of people with speech loss.”

Neural Mapping for Speech Restoration

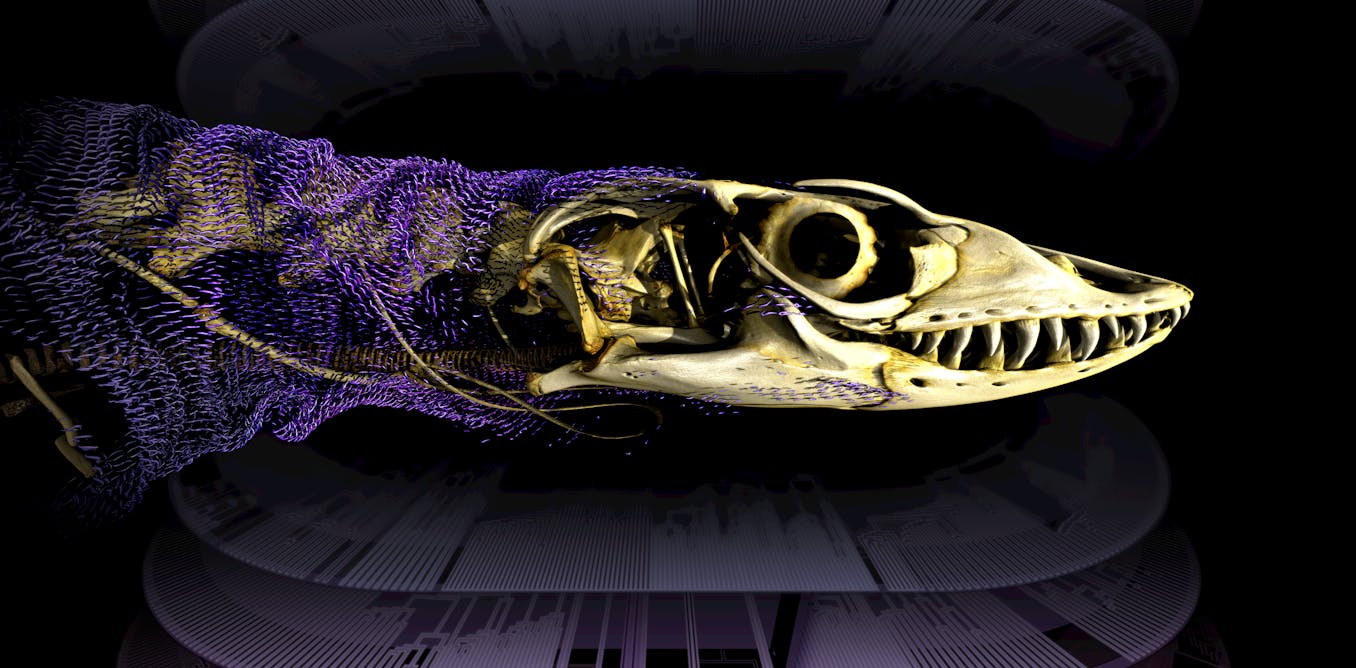

The new BCI mapped neural activity using four microelectrode arrays. In total, the scientists placed 256 microelectrodes in three brain regions, chief among them the ventral precentral gyrus, which plays a key role in controlling the muscles underlying speech.

“This technology does not ‘read minds’ or ‘read inner thoughts,’” Wairagkar says. “We record from the area of the brain that controls the speech muscles. Hence, the system only produces voice when the participant voluntarily tries to speak.”

The researchers implanted the BCI in a 45-year-old volunteer with amyotrophic lateral sclerosis (ALS), the neurodegenerative disorder also known as Lou Gehrig’s disease. Although the volunteer could still generate vocal sounds, he had been unable to produce intelligible speech on his own for years before receiving the BCI.

The neuroprosthesis recorded the neural activity that resulted when the patient attempted to read sentences on a screen out loud. The scientists then trained a deep-learning AI model on this data to produce his intended speech.

The researchers also trained a voice-cloning AI model on recordings made of the patient before the onset of his condition so the BCI could synthesize his pre-ALS voice. The patient reported that listening to the synthesized voice “made me feel happy, and it felt like my real voice,” the study notes.

The post “Real-Time Speech from Brain Signals Achieved” by Charles Q. Choi was published on 06/19/2025 by spectrum.ieee.org
