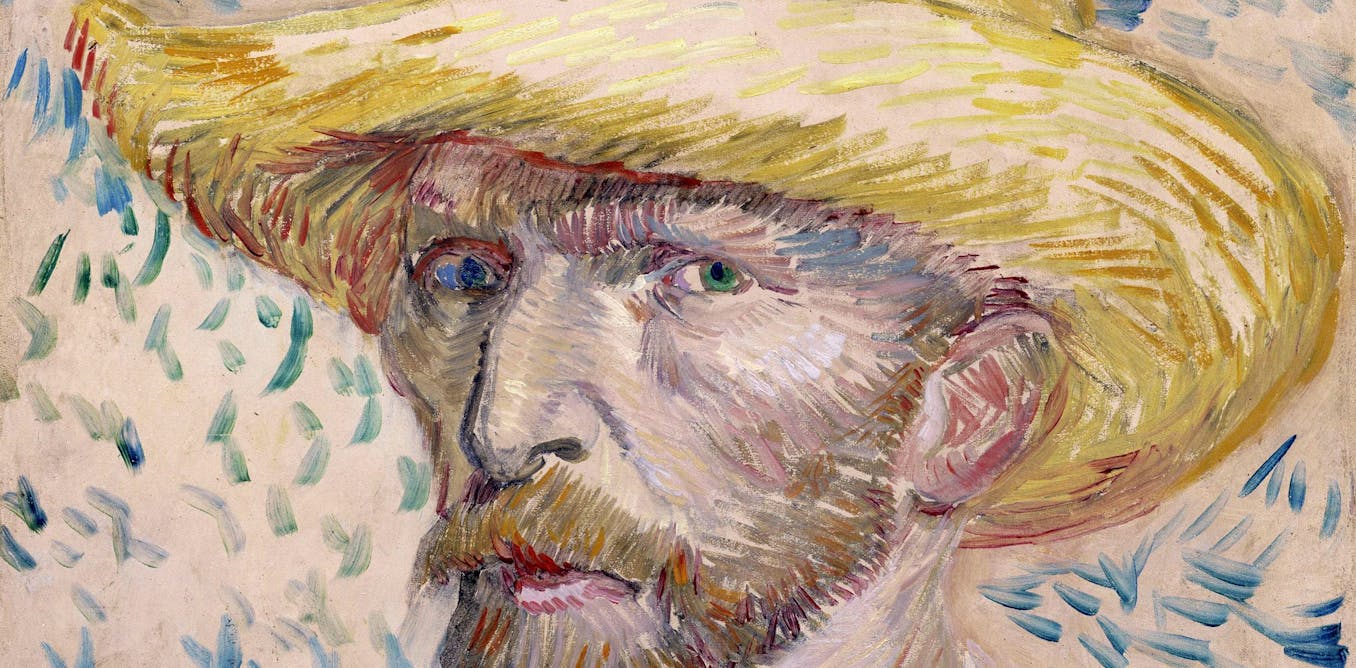

The Nobel Prize Committee for Physics caught the academic community off guard by handing the 2024 award to John J. Hopfield and Geoffrey E. Hinton for their foundational work in neural networks.

The pair won the prize for their seminal papers, both published in the 1980s, that described rudimentary neural networks. Though much simpler than the networks used for modern generative AI like ChatGPT or Stable Diffusion, their ideas laid the foundations on which later research built.

Even Hopfield and Hinton didn’t believe they’d win, with the latter telling The Associated Press he was “flabbergasted.” After all, AI isn’t what comes to mind when most people think of physics. However, the committee took a broader view, in part because the researchers based their neural networks on “fundamental concepts and methods from physics.”

“Initially, I was surprised, given it’s the Nobel Prize in Physics, and their work was in AI and machine learning,” says Padhraic Smyth, a distinguished professor at the University of California, Irvine. “But thinking about it a bit more, it was clearer to me why [the Nobel Prize Committee] did this.” He added that physicists in statistical mechanics have “long thought” about systems that display emergent behavior.

Hopfield first explored these ideas in a 1982 paper on neural networks. He described a type of neural network, later called a Hopfield network, formed by a single layer of interconnected neurons. The paper, which was originally categorized under biophysics, said such a network could retrieve whole “memories” from “any reasonably sized subpart.”
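To make the idea of recovering a memory from a partial cue concrete, here is a minimal sketch of a Hopfield-style network in Python. It is an illustration under stated assumptions, not code from Hopfield's paper: the function names (`train_hopfield`, `recall`), the Hebbian outer-product learning rule, the use of +1/-1 units, and the two eight-unit patterns are all choices made for this example.

```python
import numpy as np

def train_hopfield(patterns):
    """Build a symmetric weight matrix with a Hebbian outer-product rule.

    Assumption for this sketch: units take values +1 or -1, and
    self-connections are set to zero.
    """
    patterns = np.asarray(patterns, dtype=float)
    n_units = patterns.shape[1]
    weights = patterns.T @ patterns / n_units
    np.fill_diagonal(weights, 0.0)
    return weights

def recall(weights, cue, sweeps=5):
    """Update one unit at a time (asynchronously) for a few full sweeps."""
    state = np.array(cue, dtype=float)
    for _ in range(sweeps):
        for i in range(len(state)):
            # Each unit aligns with the weighted input from all other units.
            state[i] = 1.0 if weights[i] @ state >= 0 else -1.0
    return state

# Two hypothetical stored "memories" (chosen to be orthogonal).
stored = np.array([
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
])
weights = train_hopfield(stored)

# Corrupt the first two units of the first memory, then let the network settle.
cue = stored[0].copy()
cue[:2] *= -1
recovered = recall(weights, cue)

print("cue:      ", cue)
print("recovered:", recovered.astype(int))
```

In this toy setup, the six uncorrupted units are enough for the network to settle back into the first stored pattern. Real networks of this kind can only store a limited number of patterns reliably relative to their size.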

Hinton expanded on that work to conceptualize the Boltzmann machine, a more complex neural network described in a 1985 paper Hinton co-authored with David H. Ackley and Terrence J. Sejnowski. They introduced the concept of “hidden units,” additional layers of neurons which exist between the input and output layers of a neural network but don’t directly interact with either. This makes it possible to handle tasks that require a more generalized understanding, like classifying images.

So, what’s the connection to physics?

Hopfield’s paper references the concept of a “spin glass,” a material in which disordered magnetic particles lead to complex interactions. Hinton and his co-authors drew on statistical mechanics, a field of physics that uses statistics to describe the behavior of particles in a system. They even named their network in honor of Ludwig Boltzmann, the physicist whose work formed the foundation of statistical mechanics.
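The statistical-mechanics link can be sketched in a few lines of Python. The example below is a toy illustration, not the formulation from either paper: it assumes three +1/-1 units, a hypothetical set of couplings with mixed signs (loosely in the spirit of a spin glass), the standard pairwise energy E = -1/2 Σ w_ij s_i s_j, and the Boltzmann distribution, in which the probability of a state is proportional to exp(-E/T). The names `energy`, `boltzmann_probabilities`, and `toy_weights` are invented for this sketch.

```python
import itertools
import math

def energy(weights, state):
    """Pairwise energy E = -1/2 * sum_ij w_ij * s_i * s_j."""
    n = len(state)
    total = sum(
        weights[i][j] * state[i] * state[j]
        for i in range(n)
        for j in range(n)
    )
    return -0.5 * total

def boltzmann_probabilities(weights, temperature=1.0):
    """Assign each +1/-1 state a probability proportional to exp(-E/T)."""
    n = len(weights)
    states = list(itertools.product([-1, 1], repeat=n))
    factors = [math.exp(-energy(weights, s) / temperature) for s in states]
    partition = sum(factors)  # normalizing constant
    return {s: f / partition for s, f in zip(states, factors)}

# Hypothetical symmetric couplings: two positive, one negative, so no state
# can satisfy all three pairs at once.
toy_weights = [
    [0, 1, -1],
    [1, 0, 1],
    [-1, 1, 0],
]

probs = boltzmann_probabilities(toy_weights, temperature=1.0)
for state, p in sorted(probs.items(), key=lambda item: -item[1]):
    print(state, f"E={energy(toy_weights, state):+.1f}", f"p={p:.3f}")
```

Lower-energy states come out as more probable, which is the basic sense in which a network of this kind can be described with the same mathematics physicists use for systems of interacting particles.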

And the connection between neural networks and physics isn’t a one-way street. Machine learning was crucial to the discovery of the Higgs boson, where it sorted the data generated by billions of proton collisions. This year’s Nobel Prize for Chemistry further underscored machine learning’s importance in research, as the award went to a trio of scientists who built an AI model to predict the…


The post “Nobel Prize in Physics: Why It Went to AI Researchers” by Matthew S. Smith was published on 10/12/2024 by spectrum.ieee.org
