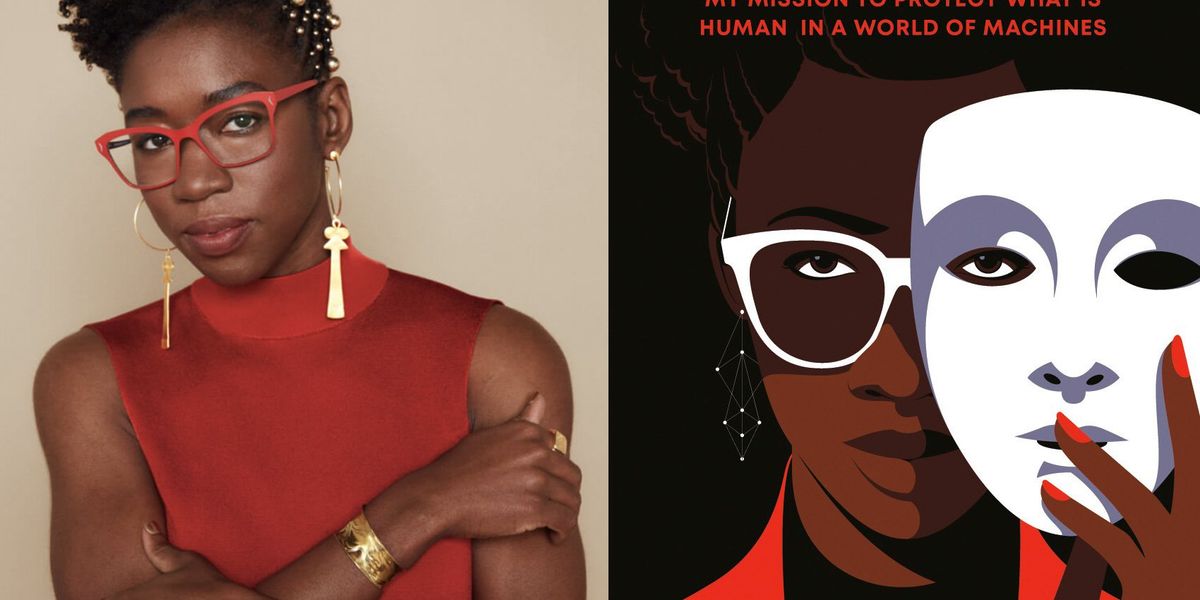

Joy Buolamwini’s AI research was attracting attention years before she received her Ph.D. from the MIT Media Lab in 2022. As a graduate student, she made waves with a 2016 TED talk about algorithmic bias that has received more than 1.6 million views to date. In the talk, Buolamwini, who is Black, showed that standard facial detection systems didn’t recognize her face unless she put on a white mask. She also brandished a shield emblazoned with the logo of her new organization, the Algorithmic Justice League, which she said would fight for people harmed by AI systems, people she would later come to call “the excoded.”

In her new book, *Unmasking AI: My Mission to Protect What Is Human in a World of Machines*, Buolamwini describes her own awakenings to the clear and present dangers of today’s AI. She explains her research on facial recognition systems and the Gender Shades project, in which she showed that commercial gender classification systems misclassified dark-skinned women far more often than light-skinned men. She also narrates her stratospheric rise: in the years since her TED talk, she has presented at the World Economic Forum, testified before Congress, and participated in President Biden’s roundtable on AI.

While the book is an interesting read on an autobiographical level, it also contains useful prompts for AI researchers who are ready to question their assumptions. She reminds engineers that default settings are not neutral, that convenient datasets may be rife with ethical and legal problems, and that benchmarks don’t always assess the right things. Via email, she answered IEEE Spectrum’s questions about how to be a principled AI researcher and how to change the status quo.

One of the most interesting parts of the book for me was your detailed description of how you did the research that became Gender Shades: how you figured out a data collection method that felt ethical to you, struggled with the inherent subjectivity in devising a classification scheme, did the labeling labor yourself, and so on. It seemed to me like the opposite of the Silicon Valley “move fast and break things” ethos. Can you imagine a world in which every AI researcher is so scrupulous? What would it take to get to such a state of affairs?

Joy Buolamwini: When I was earning my academic degrees and learning to code, I did not have examples of ethical data collection. Basically, if the data were available online, they were there for the taking. It can be difficult to imagine another way of doing things if you never see an alternative pathway. I do believe there is a world where more AI researchers and practitioners exercise more caution with data-collection activities, because of the engineers and researchers who reach out to the Algorithmic Justice League looking for a better way. Change starts with conversation, and we are having important conversations today about data provenance, classification systems, and AI harms that when I started this work…

The post “Why AI Should Move Slow and Fix Things” by Eliza Strickland was published on 11/27/2023 by spectrum.ieee.org