There are growing calls for young people under the age of 16 to be banned from having smartphones or access to social media. The Smartphone Free Childhood WhatsApp group aims to normalise young people not having smartphones until “at least” 14 years old. Esther Ghey, mother of the murdered teenager Brianna Ghey, is campaigning for a ban on social media apps for under-16s.

The concerns centre on the sort of content that young people can access (which can be harmful and illegal) and how interactions on these devices could lead to upsetting experiences.

However, as an expert in young people’s use of digital media, I am not convinced that bans at an arbitrary age will make young people safer or happier – or that they are supported by evidence around young people’s use of digital technology.

In general, most young people have a positive relationship with digital technology. I worked with South West Grid for Learning, a charity specialising in education around online harm, to produce a report in 2018 based upon a survey of over 8,000 young people. The results showed that just over two thirds of the respondents had never experienced anything upsetting online.

Large-scale research on the relationship between social media and emotional wellbeing concluded there is little evidence that social media leads to psychological harm.

Sadly, there are times when young people do experience upsetting digital content or harm as a result of interactions online. However, they may also experience upsetting or harmful experiences on the football pitch, at a birthday party or playing Pokémon card games with their peers.

It would be more unusual (although not entirely unheard of) for adults to be making calls to ban children from activities like these. Instead, our default position is “if you are upset by something that has happened, talk to an adult”. Yet when it comes to digital technology, there seems to be a constant return to calls for bans.

We know from attempts to prevent other social harms, such as underage sex or access to drugs or alcohol, that bans do not eliminate these behaviours. However, we do know that bans will mean young people will not trust adults’ reactions if they are upset by something and want to seek help.

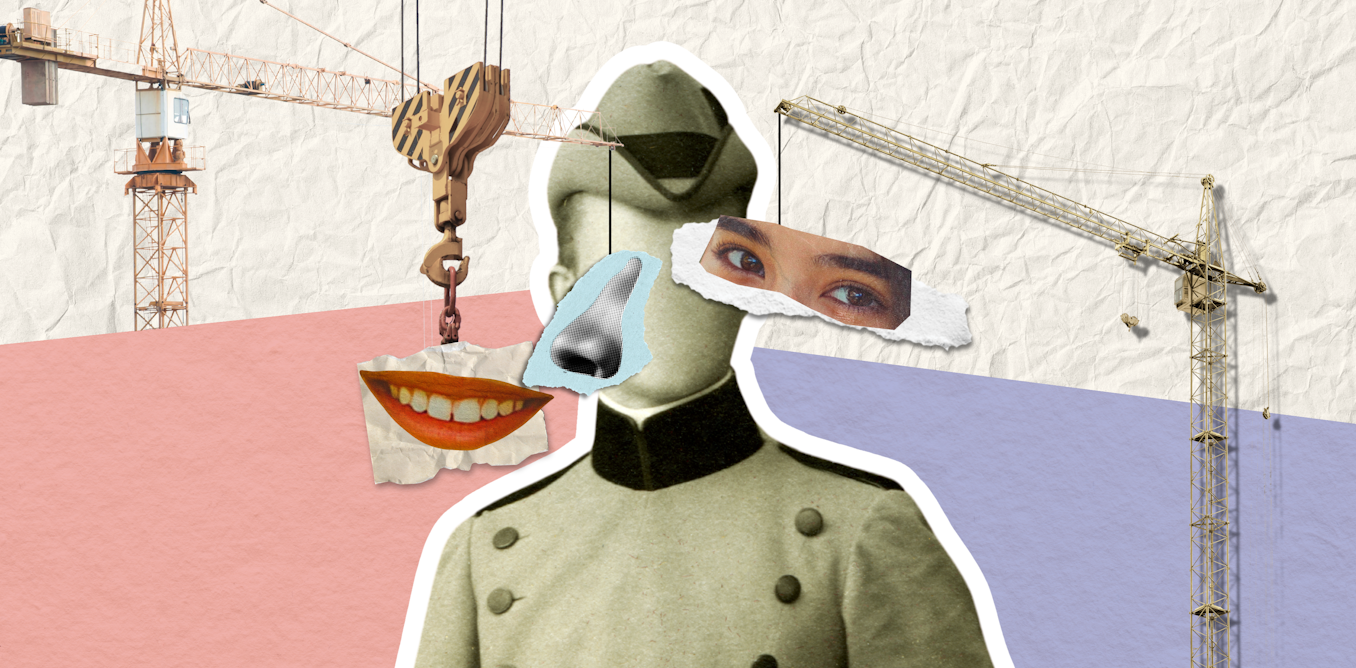

Studio Romantic/Shutterstock

I recall delivering an assembly to a group of year six children (aged ten and 11) one Safer Internet Day a few years ago. A boy in the audience told me he had a YouTube channel where he shared video game walkthroughs with his friends.

I asked if he’d ever received nasty comments on his channel and if he’d talked to any staff at his school about them. He said he had received such comments, but he would never tell a teacher because “they’ll tell me off for having a YouTube channel”.

This was confirmed after the assembly by the headteacher, who said they told young people not to do things on YouTube because it was dangerous. I suggested that supporting what was generally a positive experience might result in the young man being more confident to talk about negative comments – but was met with confusion and repetition of “they shouldn’t be on there”.

Need for trust

Young people tell us that two particularly important things they need in tackling upsetting experiences online are effective education and adults they can trust to talk to and be confident of receiving support from. A 15-year-old experiencing abuse as a result of social media interactions would likely not be confident to disclose if they knew the first response would be, “You shouldn’t be on there, it’s your own fault.”

There is sufficient research to suggest that banning under-16s from having mobile phones and using social media would not be successful. Research into widespread youth access to pornography from the Children’s Commissioner for England, for instance, illustrates the failures of years of attempts to stop children accessing this content, despite the legal age to view pornography being 18.

The prevalence of hand-me-down phones and the second-hand market makes it extremely difficult to be confident that every mobile phone contract accurately reflects the age of the user. It is a significant enough challenge for retailers selling alcohol to verify age face to face.

The Online Safety Act is bringing in online age verification systems for access to adult content. But it would seem, from the guidance by communications regulator Ofcom, that the goal is to show that platforms have demonstrated a duty of care, rather than being a perfect solution. And we know that age assurance (using algorithms to estimate someone’s age) is less accurate for under-13s than older ages.

By putting up barriers and bans, we erode trust between those who could be harmed and those who can help them. While these suggestions come with the best of intentions, sadly they are doomed to fail. What we should be calling for is better understanding from adults, and better education for young people instead.

The post “Why bans on smartphones or social media for teenagers could do more harm than good” by Andy Phippen, Professor of IT Ethics and Digital Rights, Bournemouth University was published on 02/21/2024 by theconversation.com