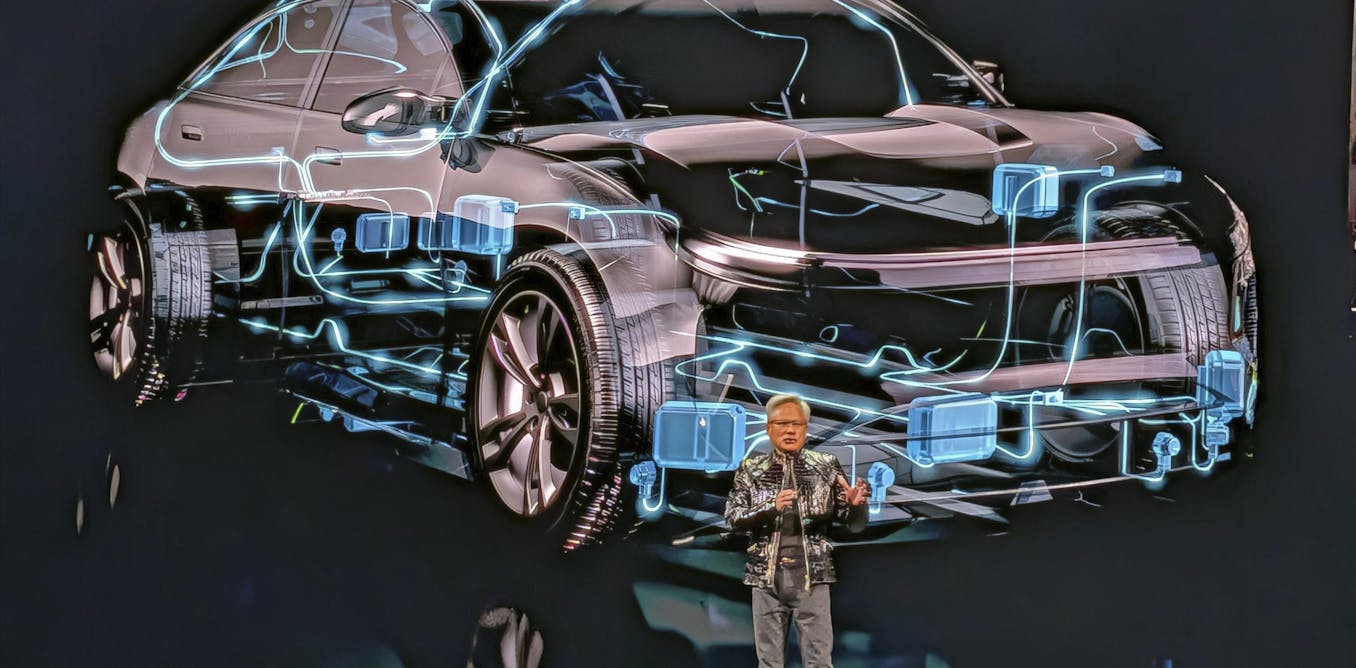

Two ‘godfathers’ of AI add their voices to a group of experts warning that humanity could lose control of AI systems if action isn’t taken soon.

In May 2023, Dr Geoffrey Hinton made headlines by leaving his job at Google to warn of the dangers of artificial intelligence. Now Hinton, Yoshua Bengio (another of the three researchers who shared the 2018 ACM Turing Award), and other senior experts, 25 authors in all, are warning in a newly published paper that AI systems could spiral out of control if AI safety isn’t taken more seriously.

“Without sufficient caution, we may irreversibly lose control of autonomous AI systems, rendering human intervention ineffective,” warns the paper. “Large-scale cybercrime, social manipulation, and other harms could escalate rapidly. This unchecked AI advancement could culminate in a large-scale loss of life and the biosphere, and the marginalization or extinction of humanity.

“We are not on track to handle these risks well. Humanity is pouring vast resources into making AI systems more powerful but far less into their safety and mitigating their harms.”

The group estimates that only 1–3% of AI publications focus on safety, with far more attention going to making AI more capable than to making it safe.

Why do we need AI safety?

As well as encouraging more research into AI safety, the group calls directly on governments worldwide to “enforce standards that prevent recklessness and misuse”. The paper points to fields such as pharmaceuticals, financial systems, and nuclear energy, where society already relies on government oversight to reduce risks, and argues that governance frameworks for AI are far less developed.

While China, the European Union, the United States, and the United Kingdom are applauded for taking the first steps in AI governance, the group writes that these early measures “fall critically short in view of the rapid progress in AI capabilities”.

“We need governance measures that prepare us for sudden AI breakthroughs while being politically feasible despite disagreement and uncertainty about AI timelines,” it continues. “The key is policies that automatically trigger when AI hits certain capability milestones.”

Although the group writes that it’s not too late to put mitigation and failsafe policies in place, the paper’s sense of urgency is clear. The authors urge governments around the world to act now, warning that AI may soon advance beyond the point where human intervention can keep it in check.

Featured image: Ideogram

The post “Godfathers of AI warn we may ‘lose control’ of AI systems without intervention” by Rachael Davies was published on 05/21/2024 by readwrite.com